Azure SQL

This page will guide you through the setup of Azure SQL in K using the direct connect method.

Integration details

Scope | Included | Comments |

|---|---|---|

Metadata | YES | |

Lineage | YES | Requires logging to be enabled |

Usage | YES | |

Sensitive Data Scanner | NO | Sensitive data scanner does not currently support Azure SQL |

Known limitations

Queries, macros and procedures must include fully qualified names in order to be correctly parsed.

Step 1) Azure SQL Access

Setting up Azure SQL for metadata extraction is a 2 step process.

Step 1: Establish SQLServer Access

Apply in MASTER using an Azure SQL Admin user replacing kadauser and PASSWORD with your choice of username and password

CREATE LOGIN kadauser WITH password='PASSWORD';

CREATE USER kadauser FROM LOGIN kadauser;

Apply per database in scope for metadata collection.

CREATE USER kadauser FROM LOGIN kadauser;

GRANT VIEW DEFINITION TO kadauser;

GRANT VIEW DATABASE STATE to kadauser;

GRANT CONTROL to kadauser; -- required for extended events sys.fn_xe_file_target_read_fileThe following table should also be available to SELECT by the user created in each database

INFORMATION_SCHEMA.ROUTINES

INFORMATION_SCHEMA.VIEWS

INFORMATION_SCHEMA.TABLE_CONSTRAINTS

INFORMATION_SCHEMA.CONSTRAINT_COLUMN_USAGE

INFORMATION_SCHEMA.TABLES

INFORMATION_SCHEMA.COLUMNS

INFORMATION_SCHEMA.VIEWS

sys.foreign_key_columns

sys.objects

sys.tables

sys.schemas

sys.columns

sys.databases

Step 2: Setup Extended Event Logging

Extended Events Setup is in pilot for Azure SQL

An Azure SQL Admin will need to setup an extended events process to capture Query Execution in Azure SQL.

Some tuning of the logging parameters may be needed depending on event volumes generated on your Azure SQL instance.

First create or reuse an existing Azure Storage Account.

Then create a blob in the example the blob is called extended-events

Run the following script to setup Extended Events logging.

Apply per database in scope for metadata collection.

CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<REPLACE with your key: abc1234>';

CREATE DATABASE SCOPED CREDENTIAL [https://your.blob.core.windows.net/extended-events]

WITH IDENTITY='SHARED ACCESS SIGNATURE',

SECRET = '< REPLACE WITH YOUR SAS TOKEN: sp=racwdl ...>';

-- Make sure this file name is unique per database: ADD TARGET package0.event_file (SET filename = N'...'

CREATE EVENT SESSION [KADA] ON DATABASE

ADD EVENT sqlserver.sp_statement_completed (

ACTION(package0.event_sequence, sqlserver.client_app_name, sqlserver.client_hostname, sqlserver.database_id, sqlserver.database_name, sqlserver.query_hash, sqlserver.session_id, sqlserver.transaction_id, sqlserver.username) WHERE (

(

[statement] LIKE '%CREATE %'

OR [statement] LIKE '%DROP %'

OR [statement] LIKE '%MERGE %'

OR [statement] LIKE '%FROM %'

)

--AND [sqlserver].[server_principal_name] <> N'USERS_TO_EXCLUDE'

AND [sqlserver].[is_system] = (0)

AND NOT [statement] LIKE 'Insert into % Values %'

AND [sqlserver].[Query_hash] <> (0)

)

),

ADD EVENT sqlserver.sql_statement_completed (

SET collect_statement = (1) ACTION(package0.event_sequence, sqlserver.client_app_name, sqlserver.client_hostname, sqlserver.database_id, sqlserver.database_name, sqlserver.query_hash, sqlserver.session_id, sqlserver.transaction_id, sqlserver.username) WHERE (

(

[statement] LIKE '%CREATE %'

OR [statement] LIKE '%DROP %'

OR [statement] LIKE '%MERGE %'

OR [statement] LIKE '%FROM %'

)

--AND [sqlserver].[server_principal_name] <> N'N'USERS_TO_EXCLUDE'

AND [sqlserver].[is_system] = (0)

AND NOT [statement] LIKE 'Insert into % Values %'

AND [sqlserver].[Query_hash] <> (0)

)

) ADD TARGET package0.event_file (SET filename = N'https://your.blob.core.windows.net/extended-events/<REPLACE with your db name: database1>.xel')

WITH (MAX_MEMORY = 4096 KB, EVENT_RETENTION_MODE = ALLOW_MULTIPLE_EVENT_LOSS, MAX_DISPATCH_LATENCY = 30 SECONDS, MAX_EVENT_SIZE = 0 KB, MEMORY_PARTITION_MODE = NONE, TRACK_CAUSALITY = ON, STARTUP_STATE = ON)

GO

Step 2) Create the Source in K

Go to Settings, Select Sources and click Add Source

Select “Load from File” option

Click Add Source and select Azure SQL

Select Direct Connect and add your Azure SQL details and click Next

Fill in the Source Settings and click Next

Name: The name you wish to give your Azure SQL Server

Host: Add the server location for the Azure SQL Server instance

Version number: Set the Azure SQL Server version

Extract Meta Only: Set this if extended events is not enabled

Add the Connection details and click Save & Next when connection is successful

Host: Add the Azure SQL Server location

Username: Add the Azure SQL Server User created in Step 1

Password: Add the User password created in Step 1

Test your connection and click Save

Return to the Sources page and locate the new Azure SQL Server source that you created

Click on the clock icon to select Edit Schedule and set your preferred schedule for the Azure SQL Server load

Note that scheduling a source can take up to 15 minutes to propagate the change.

Step 3) Manually run an ad hoc load to test SQL Server setup

Next to your new Source, click on the Run manual load icon

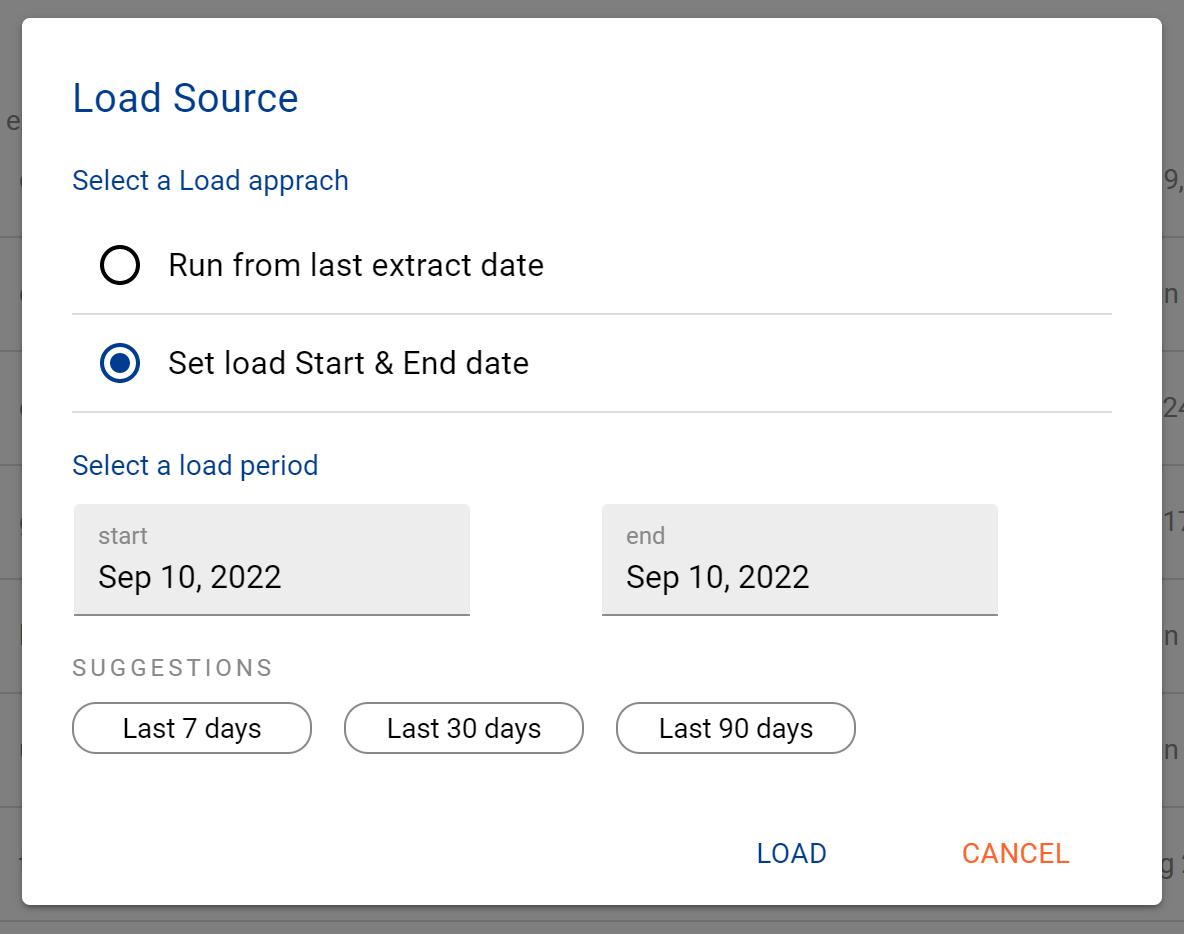

Confirm how you want the source to be loaded

After the source load is triggered, a pop up bar will appear taking you to the Monitor tab in the Batch Manager page. This is the usual page you visit to view the progress of source loads

A manual source load will also require a manual run of

DAILY

GATHER_METRICS_AND_STATS

To load all metrics and indexes with the manually loaded metadata. These can be found in the Batch Manager page

Troubleshooting failed loads

If the job failed at the extraction step

Check the error. Contact KADA Support if required.

Rerun the source job

If the job failed at the load step, the landing folder failed directory will contain the file with issues.

Find the bad record and fix the file

Rerun the source job